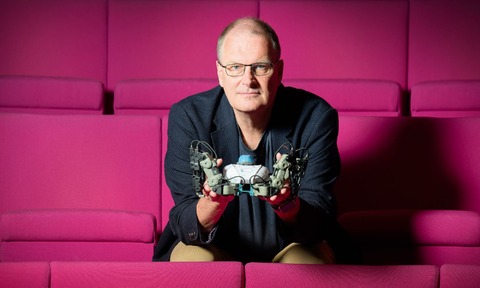

Royal Institution lecturer Professor Mike Wooldridge expands on the scientific and ethical dimensions of artificial intelligence, its potential benefits and, of course, those possible pitfalls.

As an expert in your field, talking to an audience of young digital natives at the Ri Christmas Lectures, what is the essence of your address to them?

Well, as you’ve rightly identified in the question, the young audience in the Royal Institution Theatre is likely to be much more familiar with AI than many people of my generation. Young people of that age have never known a world without AI and they will use it so much more intuitively as a result. Yet my experience is that they share many of the same concerns or fears as adults: whether AI will take jobs, how we can control its rapid development, and ethical issues were all strong themes at the Ri’s recent Youth Summit, for example.

So the key thing I’d like to do through the Christmas Lectures is to demystify AI, demonstrating to the audience, to anyone watching regardless of age, how it works, and the many, many ways in which we’re already using it every day, without really realising it. Once you look under the hood, you realise that you’re not dealing with a mind, or consciousness, but rather with a tool, just like a calculator or a laptop. And tools are what we’ve used since the Stone Age, to make everyday tasks easier, and understanding that makes the rapid advance of AI a lot less daunting.

Data capture is vital to the development of AI. Do you expect your audience to go on to develop very different concepts of privacy and confidentiality rights?

From my perspective it seems that younger generations already have a different understanding or approach to privacy and confidentiality; at once much more aware of their right to privacy but seemingly less concerned with taking precautions to maintain it. But it’s certainly true that the development of AI raises new questions about privacy and confidentiality: that it’s been trained on our social media feeds, for example, and that the data it relies on is hidden in neural networks, with no obvious ‘database’ that you can point to and say ‘this is where information about Mike is stored’.

We haven’t had to deal with these questions before, and as a society we need to get to grips with them. But a starting point is understanding, so to return to my aim to demystify AI technology through the Christmas Lectures, I know, for example, that nothing we post on social media is off limits for AI learning and I want to make sure as many people as possible also know that.

As we can interrogate AI, could we soon arrive at a time where it becomes commonplace for AI to explicitly/verbally interrogate us in order to develop its ‘understanding’?

Yes, I think that’s a very real possibility; and in fact, we’re starting to see it already. Even ChatGPT can ask us questions and of course large language models like ChatGPT use all online content to learn, whether it’s human or AI generated. So there’s that infamous example of a company’s employees using an LLM to write a company strategy, only to see that same strategy available to their competitors a few days later.

Some people are worried that we’ll inadvertently end up with AI that manipulates us and although I don’t know how realistic that is, I can see how AI might routinely review and critique our written outputs in the workplace – for example, emails and such like – so I’d say it’s realistic enough to give it due consideration.

What significance might social choice theory and Explainable AI have in designing approaches to ensure ethical decision-making, accountability, transparency and the avoidance of bias or untruths?

I think ethics and explainable AI have a significant role to play, but there’s no absolutely perfect system or output, in any area of scientific advance, for the simple reason that humans are involved and as hard as we try, we’re flawed.

There’s a dream that machines can be designed to be perfectly ethical and free of biases and prejudices that plague human society, but without being unduly ‘doom and gloom’ about it, unfortunately, a dream is all it is. AI can be a powerful tool to help us make better decisions or to achieve our goals more quickly or efficiently, but I strongly believe the ethical buck stops with the human being.

If an individual deploys an AI system that harms somebody, the fault is that individual’s, not the AI’s. That will be the focus of the third Christmas Lecture – what the future might hold, including the ethical questions we need to address collectively.

How might quantum computing impact the development of AI?

We don’t really understand what the broader impact of quantum computing is going to be or if it will really work at scale. But if it does, then it changes everything, not just AI. Lots of things in computing that we take for granted – such as having trustworthy cryptography – will need to be rethought. And then it would have a massive impact on AI as well.

Lots of problems that we currently think of as being very hard would be rendered trivial, because – so the theory goes – quantum computers would be immensely more powerful than conventional computers.

In truth, we don’t know where quantum computing is heading. But if it really works, then it is just as important as AI.

Predictive modelling, drug discovery, personalised medicine and experiment design are among the many areas in which the lab can gain from AI. Which has been the greatest beneficiary and where do you expect to see the most development in future?

The healthcare setting is one of the places where AI is already having a considerable impact, and there are many notable successes. For example, AI in wearable technology may offer early indications of the onset of a range of diseases and other medical conditions. But the single most striking example that I’ve come across is AlphaFold – an open access AI system that predicts a protein’s 3D structure from its amino acid sequence – which is having an enormous positive impact on researchers in the pharmaceutical industry.

This is the real-world application of technology that’s going to save lives – and this technology is just going to get better and better. I’m sure that the young audience for the Christmas Lectures may be more interested in AI applications for gaming, but we are going to focus on the myriad ways in which we’re already using AI on a daily basis in the second Lecture, and we are going to spend a decent amount of time looking at healthcare.

Given the pressures on the National Health Service and the lack of joined-up bureaucracy, how transformational could AI prove?

Over what timescale and cost? Just a single instance of where AI can reduce or remove mundane and time-consuming work tasks – distilling the contents of two reports into a single new one, for example, which many of us are often called upon to do – demonstrates pretty conclusively for me just how transformational AI can be in large organisations or networks. Timescales and the level of investment needed are another matter of course, but the potential is clearly there. I’m not sure whether the young audience of the Christmas Lectures will want to hear about how AI improves productivity, but it will do, and they will feel the benefits when they go into the workplace.

Japan, with its ageing population, has often led in harnessing AI in social care. Should we take a more benevolent view of its likely effects on the psychological and physical well-being of patients and users?

There are multiple applications in assistive technology for elderly people, but the idea of AI companions at the heart of your question is one of the more complicated. Some people think it’s a terrible idea, but others argue it would be cruel to deny lonely or housebound elderly people the comforts of companionship.

Certainly I am of the opinion that we should take a more benevolent view of the potential for human interaction with AI; Hollywood’s portrayal of humanoid AI such as Terminator and the like hasn’t done us any favours. But it’s not an unqualified benevolence.

Because when we interact with AI we’re not interacting with a conscious entity; AI doesn’t have a mind. When you ask an LLM like ChatGPT a question, it’s doing nothing more than predicting what you want to hear based on the prompt you’ve given it. It doesn’t understand the world you’re in, nor how you’re feeling on the day, because it’s never experienced either. So the potential ramifications of relying too greatly on advice from AI, for example, are pretty clear. Ultimately, those who understand you best, or understand a problem you’re dealing with, or the mood you’re in, are humans. If you want empathy, then don’t look to a machine.

Revolutionary technologies are highly disruptive in the workplace. Is there a means to manage the transition from one era to another?

Yes, there has to be, but I don’t think that it will be set out by AI researchers alone, and nor is it solely Government’s problem, nor business’s, nor the public’s. Rather, all will need to contribute. Certainly we need to be clear-headed about what the risks are and to act to mitigate those at an early stage. No doubt big business will find a way, with the commercial imperative, but can we say that transition will be to the benefit of all? Possibly not.

I am clear though that we have a huge challenge – and a golden opportunity – to make sure that this rapidly moving field of scientific advance, which has such huge potential for the betterment of society, is harnessed for everyone.

Is it realistic to talk about a global approach to AI governance in a polarised world?

It won’t be easy, not least because navigating this space involves the world’s richest companies and the world’s most powerful countries – their interests are not aligned. There are some indicators of what might be possible – agreements on the use of land mines in warfare, for example. So while talking about it may need a heavy dose of pragmatism, it’s important enough to mean that we need to keep talking, and need to push forward.

Do we spend too much time worrying about what AI might become and too little focusing on the potential behaviours of the humans in charge, whether they run large corporations or totalitarian states?

In short, yes. I don’t worry about ChatGPT doing bad things, but I do worry about people using it to do bad things. As an AI researcher, it won’t be a surprise that I think we should be constantly pushing the limits, constantly exploring the potential for AI. But running alongside the question of what amazing thing AI can do next is how it should do it, and for whose benefit. And that is an entirely human question.

- Mike Wooldridge is Professor of Computer Science at Oxford University and Director of AI at The Alan Turing Institute. The 2023 Christmas Lectures from the Royal Institution – ‘The truth about AI’ – are available on BBC iPlayer.